UAVSAR


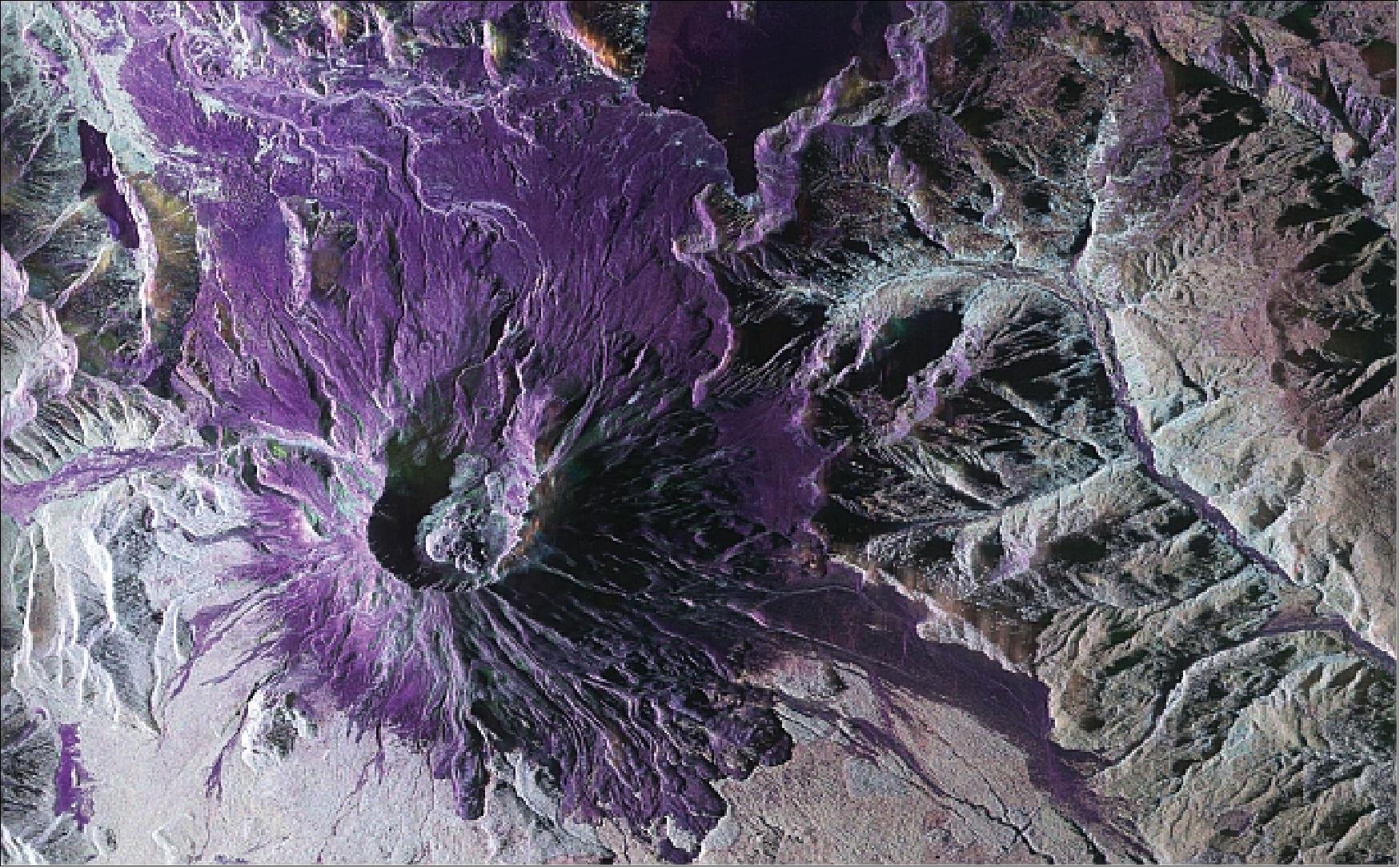

Fig. 1 UAVSAR polarimetric imagery of Mt. St. Helens. Source: Jones et al. 2008

Developers:

Jack Tarricone, University of Nevada, Reno

Zach Keskinen, Boise State University

Other contributors:

Ross Palomaki, Montana State University

Naheem Adebisi, Boise State University

What is UAVSAR?

UAVSAR, short for “Uninhabited Aerial Vehicle Synthetic Aperture Radar”, is a low-frequency airborne synthetic aperture radar. It captures imagery with an L-band radar. This low frequency lets the signal penetrate into and through clouds, vegetation, and snow.

| Band and wavelength (cm) | Resolution (range x azimuth, m) | Swath width (km) | Polarizations | Launch date |
|---|---|---|---|---|
| L-band, 23 | 1.8 x 5.5 | 16 | VV, VH, HV, HH | 2007 |
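
The wavelength in the table follows from the radar frequency through wavelength = c / f. The sketch below shows that arithmetic. The center frequency used here is an assumption (this tutorial does not state it), chosen as a typical value for the instrument; the result lands close to the 23 cm listed above.

```python
SPEED_OF_LIGHT_M_S = 299_792_458  # exact, by SI definition


def wavelength_cm(frequency_hz):
    """Return the free-space wavelength in centimeters for a frequency in Hz."""
    return SPEED_OF_LIGHT_M_S / frequency_hz * 100


# Assumed L-band center frequency; not taken from this tutorial.
ASSUMED_CENTER_FREQUENCY_HZ = 1.2575e9

print(f"{wavelength_cm(ASSUMED_CENTER_FREQUENCY_HZ):.1f} cm")
```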

NASA SnowEx 2020 and 2021 UAVSAR Campaigns

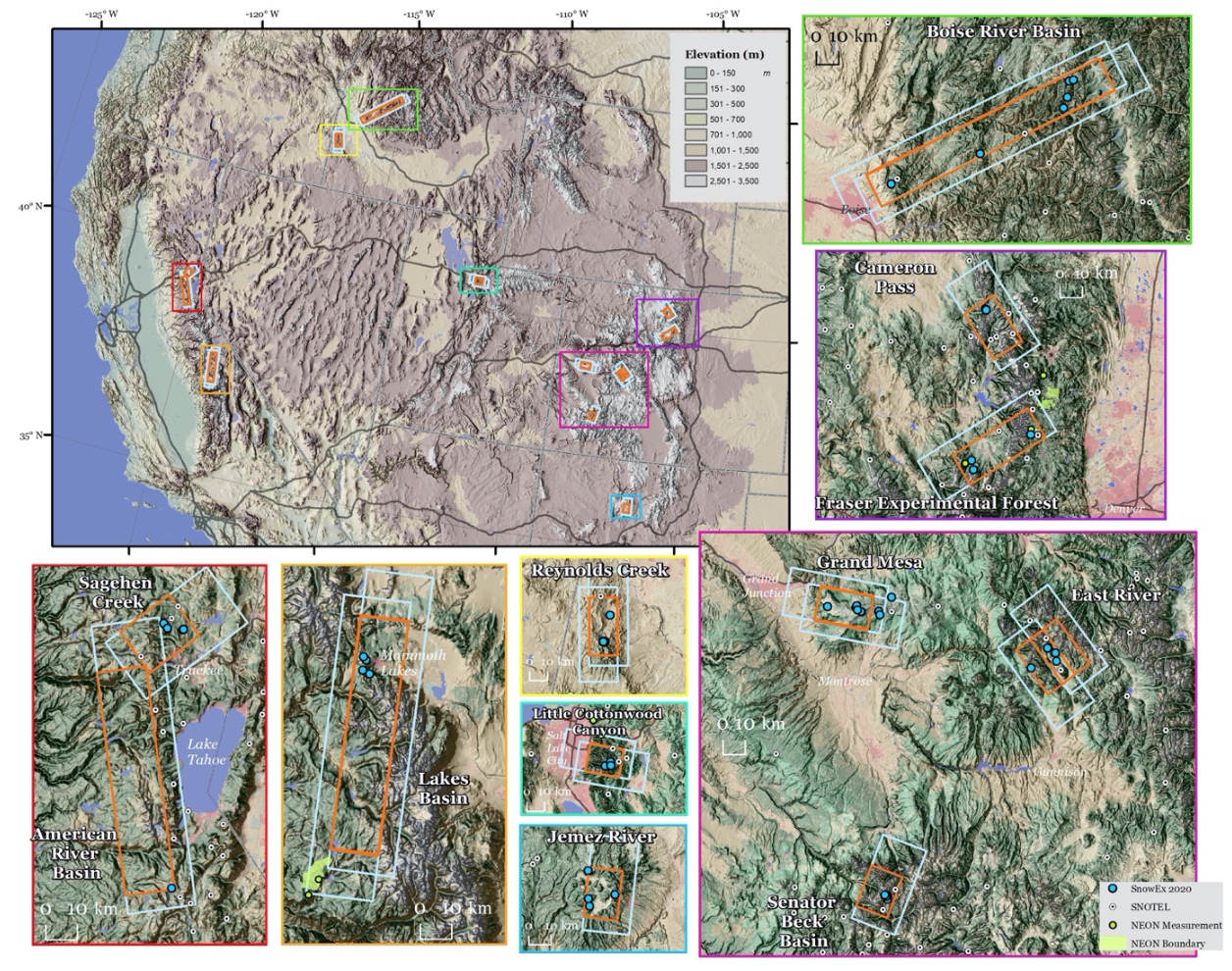

During the winters of 2020 and 2021, NASA flew an L-band InSAR time series across the Western US with the goal of tracking changes in snow water equivalent (SWE). On each flight date, field teams deployed to 13 locations in 2020 and to 6 locations in 2021 to collect calibration and validation observations.

Fig. 2 Map of the UAVSAR flight locations for NASA SnowEx. Note that the Montana site (Central Agricultural Research Center) is not on this map. Source: Chris Hiemstra

The table below lists the site locations from the map above, along with the UAVSAR campaign name and the number of InSAR image pairs currently processed for each site. The image pair count may include multiple versions of the same image and may grow as JPL processes more pairs. Also note that the Lowman campaign name carries the wrong state (CO, although the Boise River Basin is in Idaho), so search for it exactly as listed.

| Site Location | Campaign Name | Image Pairs |
|---|---|---|
| Grand Mesa | Grand Mesa, CO | 13 |
| Boise River Basin | Lowman, CO | 17 |
| Fraser Experimental Forest | Fraser, CO | 16 |
| Senator Beck Basin | Ironton, CO | 9 |
| East River | Peeler Peak, CO | 4 |
| Cameron Pass | Rocky Mountains NP, CO | 15 |
| Reynolds Creek | Silver City, ID | 1 |
| Central Agricultural Research Center | Utica, MT | 2 |
| Little Cottonwood Canyon | Salt Lake City, UT | 21 |
| Jemez River | Los Alamos, NM | 3 |
| American River Basin | Eldorado National Forest, CA | 4 |
| Sagehen Creek | Donner Memorial State Park, CA | 4 |
| Lakes Basin | Sierra National Forest, CA | 3 |
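
Because the campaign names rarely match the site names, a small lookup can help avoid typos when searching. The sketch below copies the table into a plain dictionary; the names `SNOWEX_CAMPAIGNS` and `campaign_name` are hypothetical helpers for illustration, not part of any library.

```python
# Site location -> UAVSAR campaign name, copied from the table above.
SNOWEX_CAMPAIGNS = {
    "Grand Mesa": "Grand Mesa, CO",
    "Boise River Basin": "Lowman, CO",  # campaign name lists the wrong state
    "Fraser Experimental Forest": "Fraser, CO",
    "Senator Beck Basin": "Ironton, CO",
    "East River": "Peeler Peak, CO",
    "Cameron Pass": "Rocky Mountains NP, CO",
    "Reynolds Creek": "Silver City, ID",
    "Central Agricultural Research Center": "Utica, MT",
    "Little Cottonwood Canyon": "Salt Lake City, UT",
    "Jemez River": "Los Alamos, NM",
    "American River Basin": "Eldorado National Forest, CA",
    "Sagehen Creek": "Donner Memorial State Park, CA",
    "Lakes Basin": "Sierra National Forest, CA",
}


def campaign_name(site):
    """Return the campaign name for a site, with a helpful error if unknown."""
    try:
        return SNOWEX_CAMPAIGNS[site]
    except KeyError:
        known = ", ".join(sorted(SNOWEX_CAMPAIGNS))
        raise KeyError(f"Unknown site {site!r}. Known sites: {known}") from None


print(campaign_name("Boise River Basin"))
```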

Why would I use UAVSAR?

UAVSAR works with low-frequency radar waves. These low frequencies (< 3 GHz) can penetrate clouds and maintain coherence (a measure of radar image quality) over long periods. For these reasons, time series were captured over 13 sites during the winters of 2019-2020 and 2020-2021 for snow applications. Additionally, UAVSAR is awesome!

Accessing UAVSAR Images

UAVSAR imagery can be downloaded from both JPL and the Alaska Satellite Facility (ASF). However, both provide the imagery in a binary format that GIS software and Python libraries cannot readily read or use.

Data Download and Conversion with uavsar_pytools

uavsar_pytools (GitHub) is a Python package that grew out of work started at SnowEx Hackweek 2021. It natively downloads, formats, and converts this data into analysis-ready rasters projected in WGS-84 Lat/Lon (EPSG:4326). The data traditionally comes in a binary format that traditional geospatial analysis software (Python, R, QGIS, ArcGIS) cannot ingest. The package can download and convert either individual images (UavsarScene) or entire collections of images (UavsarCollection).

Netrc Authorization

To download UAVSAR images you need a netrc file that contains your Earthdata username and password. If you need to register for a NASA Earthdata account, use this link. A netrc file is a hidden file in your home directory (it won’t appear in your file explorer) that programs read to get the appropriate usernames and passwords. While you’ll have already done this for the Hackweek virtual machines, uavsar_pytools has a tool to create this netrc file on a local computer. You only need to create this file once; after that it stays on your computer.

# Create a .netrc file with your Earthdata login information.
# from uavsar_pytools.uavsar_tools import create_netrc
#
# This will prompt you for your username and password and save this
# information into a .netrc file in your home directory. You only need to run
# this command once per computer. Then it will be saved.
# create_netrc()
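
Before starting a download, it can be useful to confirm that a netrc entry is already in place. The following is a minimal sketch using only Python's standard-library `netrc` module. It assumes the credentials are stored under the Earthdata Login host `urs.earthdata.nasa.gov`; that host name and the helper `has_earthdata_login` are assumptions for illustration, not something uavsar_pytools provides.

```python
import netrc
from pathlib import Path

# Assumed host under which Earthdata credentials are stored.
EARTHDATA_HOST = "urs.earthdata.nasa.gov"


def has_earthdata_login(netrc_path=None, host=EARTHDATA_HOST):
    """Return True if the netrc file exists and has an entry for `host`."""
    path = Path(netrc_path) if netrc_path else Path.home() / ".netrc"
    if not path.is_file():
        return False
    try:
        entry = netrc.netrc(str(path)).authenticators(host)
    except netrc.NetrcParseError:
        # The file exists but is malformed.
        return False
    return entry is not None


if has_earthdata_login():
    print("Found Earthdata credentials in ~/.netrc")
else:
    print("No Earthdata entry found; create a .netrc file first")
```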

Downloading and converting a single UAVSAR interferogram scene¶

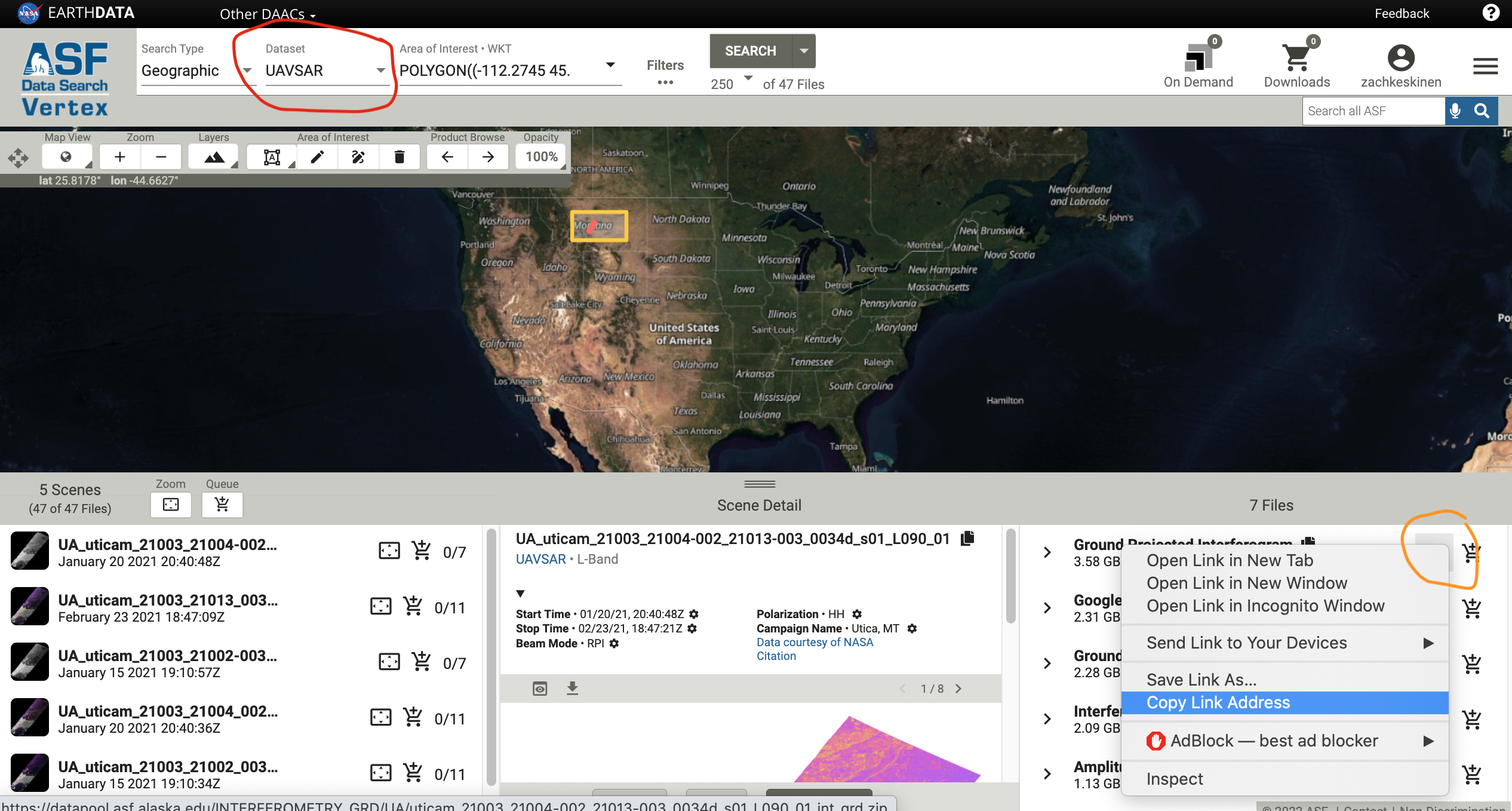

You can find urls for UAVSAR images at the ASF vertex website. Make sure to change the platform to UAVSAR and you may also want to filter to ground projected interferograms.

Fig. 3 Example of getting the UAVSAR URL from vertex.¶

try:
    from uavsar_pytools import UavsarScene
except ModuleNotFoundError:
    print('Install uavsar_pytools with `pip install uavsar_pytools`')

# Directory in which to download and convert the images.
work_dir = '/tmp/uavsar_data'

# URL of the scene to download. It can be obtained from Vertex.
url = ('https://datapool.asf.alaska.edu/INTERFEROMETRY_GRD/UA/'
       'lowman_23205_21009-004_21012-000_0007d_s01_L090_01_int_grd.zip')

# clean=True deletes the binary and zip files, leaving only the tiffs.
scene = UavsarScene(url=url, work_dir=work_dir, clean=True)

# Running url_to_tiffs() downloads the zip file, unzips the binary files, and
# converts them to GeoTIFFs in a directory named after the scene inside the
# work directory. It also generates a .csv file of the scene metadata.
# scene.url_to_tiffs()
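
The scene name in the URL above encodes information about the image pair. The sketch below splits such a name into fields using only the standard library. The meaning assigned to each field (site, flight line, two flight and data-take IDs, temporal baseline in days, segment, band and steering angle, version) is an assumption based on the usual UAVSAR naming pattern and should be checked against the scene's annotation file; `parse_insar_name` is a hypothetical helper, not part of uavsar_pytools.

```python
from pathlib import PurePosixPath
from urllib.parse import urlparse


def parse_insar_name(url):
    """Split a UAVSAR InSAR file name into its underscore-separated fields.

    Field meanings are assumed, not taken from an official specification.
    """
    file_name = PurePosixPath(urlparse(url).path).name
    stem = file_name.split(".")[0]
    parts = stem.split("_")
    site, line_id, first, second, baseline, segment, band, version = parts[:8]
    return {
        "site": site,
        "line_id": line_id,
        "first_flight": first.split("-")[0],
        "second_flight": second.split("-")[0],
        "baseline_days": int(baseline.rstrip("d")),
        "segment": segment,
        "band_and_steering": band,
        "version": version,
        "product": "_".join(parts[8:]),
    }


example_url = ('https://datapool.asf.alaska.edu/INTERFEROMETRY_GRD/UA/'
               'lowman_23205_21009-004_21012-000_0007d_s01_L090_01_int_grd.zip')
for key, value in parse_insar_name(example_url).items():
    print(f"{key}: {value}")
```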

Downloading and converting a full UAVSAR collection

If you want to download and convert an entire UAVSAR collection for a larger analysis, you can use UavsarCollection. The collection names for the SnowEx campaign are listed in the table in the introduction. UavsarCollection can download either InSAR pairs or PolSAR images.

from uavsar_pytools import UavsarCollection

# Collection name. The SnowEx collection names are listed above. These are
# case and space sensitive.
collection_name = 'Grand Mesa, CO'

# Directory to save the collection into. It will be filled with one directory
# per scene, named after the scene, with the tiffs inside.
out_dir = '/tmp/collection_ex/'

# This is optional, but you will generally want to at least limit the date
# range to between 2019 and today.
date_range = ('2019-11-01', 'today')

# Keywords: to download incidence angles with each image use `inc = True`.
# For only certain polarizations use `pols = ['VV','HV']`.
collection = UavsarCollection(collection=collection_name, work_dir=out_dir, dates=date_range)

# You can use this to check how many image pairs have at least one image in
# the date range.
# collection.find_urls()

# When you are ready to download all the images run:
# collection.collection_to_tiffs()

# This will take a long time and a lot of space, ~1-5 GB and 10 minutes per
# image pair depending on the scene, so run it only if you have the space and
# time.
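
To judge whether a full collection is practical, the per-pair figures quoted above (roughly 1-5 GB and 10 minutes per image pair) can be scaled by the pair counts from the site table. This is only a back-of-the-envelope sketch; `estimate_collection_cost` is a hypothetical helper, and actual sizes and times will vary by scene and connection.

```python
def estimate_collection_cost(n_pairs, gb_per_pair=(1, 5), minutes_per_pair=10):
    """Scale the rough per-pair disk and time figures by the number of pairs."""
    low_gb, high_gb = gb_per_pair
    return {
        "min_gb": n_pairs * low_gb,
        "max_gb": n_pairs * high_gb,
        "minutes": n_pairs * minutes_per_pair,
    }


# Grand Mesa, CO is listed with 13 image pairs in the site table.
cost = estimate_collection_cost(13)
print(f"{cost['min_gb']}-{cost['max_gb']} GB, about {cost['minutes'] / 60:.1f} hours")
```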